A central requirement laid out by the African Health Initiative (AHI) is that the efforts of the Population Health Implementation and Training (PHIT) Partnerships to strengthen district health systems be measured by lives saved. While the route to more effective and efficient health systems may be varied and changing, even seemingly amorphous in scope, the goal of saving lives is specific and quantifiable. In this sense, this ultimate intended impact disciplines the intervention: each activity must be scrutinized for the evidence that its impact will contribute to reduced mortality.

The pathway to impact has been a primary focus of implementation research. Interventions that improve health outcomes merit reproduction and scaling up, as well as adoption in other settings. That said, an impact evaluation may answer the question of whether an intervention worked, but it does not answer the question of how it worked, or why (or why not). Often an intervention approach is composed of components that have already been subject to randomized trials. We know that oral rehydration, insecticide-treated nets and vaccines save lives. The question being asked is whether these components are being successfully delivered, rather than the worthiness of the components themselves.

Assessing the delivery strategy can get tricky, as a number of interdependent events during the implementation process will determine the eventual outcomes. As the PHIT Partnerships began implementation, many modified their strategies to address gaps, obstacles and previously unrecognized issues that were identified once intervention efforts got underway. This iterative process made sense and, in the foundation’s view, showed a responsive team that was attentive to the implementation process. Teams should respond to experience. But the initial designs, on which teams had competed for funding, were now changed. This process of “learning while doing” itself raised a host of new evaluation questions.

Now that the various PHIT Partnership interventions are past the midpoint of implementation, AHI will enhance its health systems-level evaluation of the Partnerships, which operate in five countries in sub-Saharan Africa, with an additional focus on understanding the implementation experience.

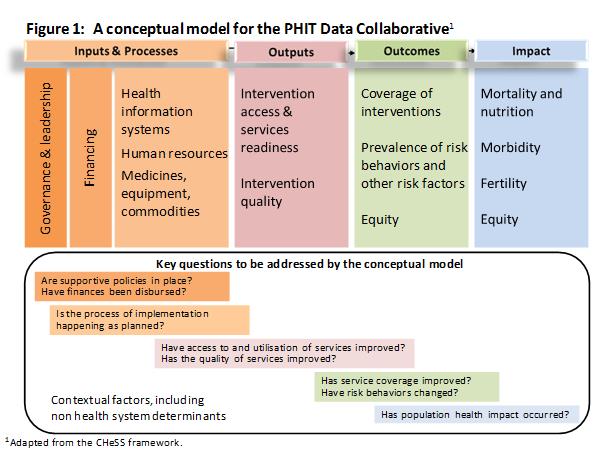

Our effort to understand implementation signifies a departure from the simple linear model used in the impact pathway, shown below at right.

This conceptual model was adopted by the PHIT Data Collaborative as AHI was launched. It guided the selection of metrics (quantifiable indicators) for each component, which are summarized in a white paper, found here. Together, working with the Data Coordinator group (led by Johns Hopkins researchers Robert Black and Jennifer Bryce), the teams created a set of common indicators for use across Partnership interventions. This model defines four major phases of implementation: inputs and processes, outputs, outcomes, and impact. Using this conceptual model as a framework, the Partnerships would use post hoc analysis to describe a chain of results.

Although just two of the health system approaches underway in AHI Partnerships (Tanzania and Zambia) include random assignment of an intervention, randomized controlled trials (RCTs) remain a gold standard for impact assessment. There are limits to the RCT, however, especially when it comes to multicomponent, complex interventions. The insufficiencies of RCTs in health systems strengthening (HSS) interventions are well described by authors English, Schellenberg, and Todd in their article “Assessing health systems interventions: key points when considering the value of randomization.” HSS interventions, unlike studies testing efficacies on a large population of individuals, involve complex interactions between senders and receivers of information and resources. A key strength of RCTs is the reduction or elimination of bias and confounding. This benefit is of less import when dealing with interventions aimed at a health systems level. Here the complex causal pathways cannot be simplified to reflect a single cause for a specific outcome. Heterogeneity (differences from expected outcomes) in HSS research cannot be accounted for by chance, as it is in RCTs involving individuals grouped into large trial and control groups. The authors observe that when health systems are the subject, “the most informative part of any study will most probably be the attempt to understand such heterogeneity in the hope of uncovering new mechanisms that influence outcomes.” Our experience in monitoring the PHIT Partnerships confirms this observation.

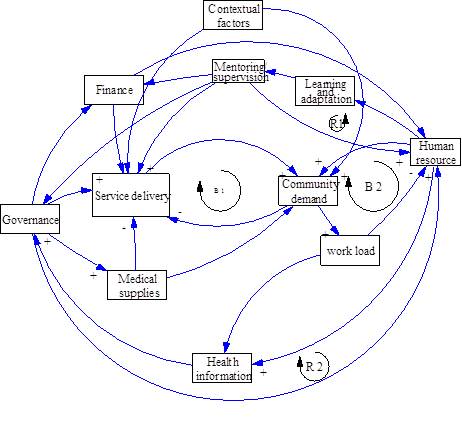

Take the example of the Zambia PHIT Partnership, led by the Center for Infectious Disease Research Zambia (CIDRZ) and the University of North Carolina, which made improving the quality of care a central activity. Its impact pathway might be summarized like this:

But when people involved in the intervention were questioned as part of Dr. Wilbroad Mutale’s research, the far more complex diagram below resulted!

For this reason, two of the PHIT Partnerships – Zambia and Mozambique – have revised their conceptual framework to track implementation practice in addition to tracking impact using the former results-chain model. Work with the Health Policy Unit at the University of Cape Town resulted in a revised, more dynamic conceptual framework that includes multidirectional interactions between actors, including external support teams, as well as consequences of implementation, both intended and unintended. The overarching objective of this added research will be to better understand the influence of implementation practice and its relation to the overall impact of the intervention on population health. We know that varying factors shape implementation throughout the course of an intervention. Rather than overlooking these factors, we consider them vital to understanding the progression of implementation, and to repeating the HSS activities currently carried out by the PHIT Partnerships. We hope to document implementation practice and the reasons for applying these approaches; understand the relationships and exchanges between agents as well as the variables influencing these interactions; evaluate the performance of the intervention as a whole; and suggest plausible linkages between implementation practice, intervention implementation, and key outcomes of the intervention.

We have come to recognize that complex health systems need to be met with complex evaluation tools. Therefore, we encourage an expansion of the investigative focus to address the goal of replicability and the longer-term goal of scalability. It is our hope that with the completion of this effort, Partnerships can begin to answer important questions that will inform the future of their interventions. How are Partnerships implementing intervention activities to strengthen health systems? Why have they chosen to use these implementation strategies? Why have activities deviated from expected outcomes? Gathering this information can inform future health systems strengthening interventions on appropriate implementation strategies for specific settings.

The challenge of global health requires new knowledge and new techniques, but much of what we already know would go a long way toward improving population health, if we can meet the challenge of delivery. To tackle this challenge we need to move beyond indicators to a deeper understanding. We are excited about this additional effort and are hopeful that it results in broader learning for the field.